Adam Schiff Married To - An Unexpected Look

Many people are curious about who Adam Schiff is married to. It's natural to wonder about the personal lives of public figures and who shares their journey. That curiosity makes sense: people like to feel connected to the individuals they see making big decisions or speaking out on important matters.

Yet a name like "Adam" can pop up in places you might not expect. We usually think of specific people when we hear it, but there is a whole other world where "Adam" means something completely different, something that has become a big deal in how smart computer systems learn. The name has a double life, depending on where you hear it.

So today we're going to talk about an "Adam" that's less about personal connections and more about how machines get smarter. It's a bit of a twist, but the story is just as compelling in its own right, especially if you're interested in how the digital world around us keeps improving. We'll explore this other "Adam" and why it matters.

Table of Contents

- Who Is Adam Schiff Married To - The Name "Adam" and What It Means Here

- Is This "Adam" the One You're Thinking Of?

- What Makes "Adam" So Talked About?

- Understanding "Adam" - What's It All About?

- How Does "Adam" Work Its Magic?

- Why Is "Adam" a Go-To Choice for Many?

- "Adam" and Its Kin - A Family Affair

- What Sets "AdamW" Apart from "Adam"?

Who Is Adam Schiff Married To - The Name "Adam" and What It Means Here

When you hear the name "Adam," your mind might go straight to someone like Adam Schiff, a well-known public figure. In the world of machine learning, though, "Adam" means something entirely different: it's short for "Adaptive Moment Estimation." So in this discussion, "Adam" has nothing to do with anyone's spouse or personal connections. It's a method that computer programs, especially those learning to do smart things, use to improve themselves over time: at each training step, it decides how much to adjust each of the program's internal settings so the program gets a little better at its task. Think of it as a behind-the-scenes helper for artificial intelligence that makes learning efficient and effective.
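
For contrast, here is the plain loop that Adam refines: gradient descent, which nudges a setting against its gradient by the same fixed step size every time. This is a minimal Python sketch; the function name `gradient_descent`, the toy objective f(x) = (x - 3)², and the step size 0.1 are illustrative assumptions, not part of any library.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Plain gradient descent: move against the gradient by a fixed step size.

    `gradient_descent` is a hypothetical helper written for illustration.
    """
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)  # same step size every time, for every setting
    return x


# Toy objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3); minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 4))
```

Every setting uses the same fixed `lr` here; Adam's contribution is to replace that single fixed step with one adapted to each setting from its own gradient history.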

Is This "Adam" the One You're Thinking Of?

You might be wondering whether that's the "Adam" you were thinking of when you clicked on this. Probably not. This "Adam" isn't a person but an algorithm, an "optimizer" as the tech folks call it, introduced in 2014. It combines two earlier ideas. One is "Momentum," which is like building up speed as you go, so the learning process keeps moving in a consistent direction instead of getting stuck in a rut. The other comes from "RMSprop," which adjusts the pace of learning depending on how bumpy or smooth the path has been. Adam uses both to decide how to change each of the many numbers (parameters) inside a model, so the whole system learns as smoothly and quickly as possible. It's a practical tool for anyone working with large, complex learning systems.
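
A minimal sketch of how the two ideas combine in a single update, for one scalar parameter in plain Python. The helper name `adam_step`, the toy objective f(x) = (x - 3)², and the step size `lr=0.05` are assumptions for illustration; `beta1=0.9`, `beta2=0.999` and `eps=1e-8` are the defaults suggested in the original Adam paper. Deep-learning frameworks apply essentially this arithmetic elementwise to whole tensors of parameters.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter (hypothetical helper).

    m: running average of gradients (the Momentum ingredient)
    v: running average of squared gradients (the RMSprop ingredient)
    t: 1-based step counter, needed for bias correction
    """
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    # m and v start at 0, so early on they are biased toward 0; undo that.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Minimize f(x) = (x - 3)^2 starting from x = 0.
x, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    grad = 2 * (x - 3)
    x, m, v = adam_step(x, grad, m, v, t, lr=0.05)
print(round(x, 3))
```

Because each step is the momentum term divided by the RMS term, the early steps here move x by roughly `lr`, however large the raw gradient is.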

What Makes "Adam" So Talked About?

So what makes this "Adam" such a hot topic in its own field? It's the go-to choice for training very large neural networks, like the ones that power chatbots or image recognition. Together with its close variant "AdamW," it's effectively the default for training today's large language models. People who work with these systems often try to pin down the differences between Adam and AdamW: the two share almost everything, but their subtle distinctions can make a real difference in how well a model learns. It's like picking the right tool for a very specific job, where even small variations matter. Adam has earned its reputation because it tends to be reliable and easy to use, often working well with little tuning, which is a big plus for complicated training tasks.

Understanding "Adam" - What's It All About?

Let's get a bit deeper into what this "Adam" really is and why it matters for machine learning. It's a method that helps computer programs learn from their mistakes, making adjustments little by little. When a program is learning something, it repeatedly makes small adjustments to its internal settings. Adam works out how much to adjust each setting, and in which direction, to get better at the task, much like a smart coach who tells you exactly how to tweak your technique after every practice session. To do this, Adam tracks two things for each setting: a running average of its recent gradients (the signals saying which direction would reduce the program's errors) and a running average of their squares (how large those gradients have been, whether they consistently point one way or bounce back and forth). Keeping track of both lets it make sensible adjustments that keep learning steady and efficient, which has made a real difference in how quickly and effectively computer systems can learn.
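
The two running averages described above can be sketched in a few lines of plain Python. The helper `moment_estimates` and the two made-up gradient sequences are illustrative assumptions; the decay rates 0.9 and 0.999 are the usual Adam defaults. A steady gradient and a sign-flipping one have the same size, so their second moments match, but only the steady one keeps a large first moment.

```python
def moment_estimates(grads, beta1=0.9, beta2=0.999):
    """Bias-corrected first and second moment estimates after a gradient sequence."""
    m = v = 0.0
    t = 0
    for g in grads:
        t += 1
        m = beta1 * m + (1 - beta1) * g        # running average of the gradients
        v = beta2 * v + (1 - beta2) * g * g    # running average of squared gradients
    if t == 0:
        return 0.0, 0.0                        # no gradients seen yet
    return m / (1 - beta1 ** t), v / (1 - beta2 ** t)


steady = [1.0] * 50          # gradient keeps pointing the same way
bouncy = [1.0, -1.0] * 25    # gradient flips sign every step

m_steady, v_steady = moment_estimates(steady)
m_bouncy, v_bouncy = moment_estimates(bouncy)
print(f"steady: m={m_steady:.3f}, v={v_steady:.3f}")
print(f"bouncy: m={m_bouncy:.3f}, v={v_bouncy:.3f}")
```

The steady sequence gives a first moment of about 1, while the bouncy one gives about -0.05; both second moments come out at about 1. That gap is the "bouncing around" signal: when gradients keep changing sign, the averaged direction is small relative to the gradients' typical size, so Adam takes small, cautious steps.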

How Does "Adam" Work Its Magic?

Adam works its magic by combining two powerful ideas. The first, "Momentum," uses past movements to keep things going in the right direction. Imagine pushing a heavy cart: once it's moving, it's easier to keep it going. In the same way, Adam keeps a running average of past gradients (each setting's direction of change) and steps along that average rather than along the latest gradient alone. This helps learning move smoothly toward its goal, avoid getting stuck, and dampens the wobbling, or "oscillation," that can otherwise slow it down. The second idea, from "RMSprop," adapts the learning speed for each individual setting. Adam keeps a running average of each setting's squared gradient and divides that setting's step by the square root of this average. If a setting's gradients have been large, its steps are scaled down; if they've been small, its steps are scaled up. This self-adjusting behavior is what makes Adam so effective at fine-tuning every setting.
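
Each ingredient can be sketched on its own in plain Python. These are illustrative single-parameter versions under assumed names (`momentum_step`, `rmsprop_step`) and assumed defaults (step size 0.01, decay 0.9 for both). The momentum rule uses the running-average form that Adam adopts, which differs only by a constant factor, absorbed into the step size, from the classic form `velocity = beta * velocity + grad`. Neither function applies Adam's bias correction.

```python
import math


def momentum_step(param, grad, velocity, lr=0.01, beta=0.9):
    """Momentum: step along a running average of past gradients."""
    velocity = beta * velocity + (1 - beta) * grad
    return param - lr * velocity, velocity


def rmsprop_step(param, grad, sq_avg, lr=0.01, beta=0.9, eps=1e-8):
    """RMSprop: divide the step by the root of a running average of squared gradients."""
    sq_avg = beta * sq_avg + (1 - beta) * grad * grad
    return param - lr * grad / (math.sqrt(sq_avg) + eps), sq_avg


# RMSprop: a steep setting (gradient 100) and a flat one (gradient 0.01)
# take steps of almost the same size on their first update.
p_steep, _ = rmsprop_step(0.0, 100.0, 0.0)
p_flat, _ = rmsprop_step(0.0, 0.01, 0.0)

# Momentum: gradients that flip sign every step mostly cancel out,
# so the velocity (and hence the wobble) stays small.
p, vel = 0.0, 0.0
for g in [1.0, -1.0] * 10:
    p, vel = momentum_step(p, g, vel)

print(p_steep, p_flat, vel)
```

Adam simply runs both bookkeeping rules at once and divides the momentum average by the RMSprop root.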

Why Is "Adam" a Go-To Choice for Many?

So why is "Adam" such a popular choice among people who build and work with smart computer systems? Largely because it performs well and is straightforward to use. Ask algorithm engineers and researchers about their favorite optimizer and many will name Adam. It has been a standard in deep learning for years, in fields ranging from recognizing pictures (computer vision, CV) to understanding language (natural language processing, NLP). Its inventors, Diederik Kingma and Jimmy Ba, introduced it in December 2014, bringing together strengths of two earlier methods, AdaGrad and RMSprop. Adam tracks both the average direction of the gradient (the "first moment estimate") and the average of its square (the "second moment estimate," which reflects how large the gradients tend to be). It keeps an exponential moving average of each, corrects both for their bias toward zero early in training, and uses them to compute every update. This makes it stable and reliable, a real advantage in complex learning tasks that can otherwise be tricky to get right.
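
In symbols, the update as it is usually written (following Kingma and Ba's paper) is shown below, where g_t is the gradient at step t, θ the parameters, α the step size, and all operations act elementwise on each setting. The divisions by 1 - β₁ᵗ and 1 - β₂ᵗ are the bias corrections; the paper suggests α = 0.001, β₁ = 0.9, β₂ = 0.999 and ε = 10⁻⁸ as defaults.

```latex
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
```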

"Adam" and Its Kin - A Family Affair

Like any popular idea, "Adam" has a family tree. Variations and improvements have followed, a bit like different versions of a favorite recipe, each with its own twist, and understanding them helps people pick the best tool for their specific needs. At its core, Adam combines the "first moment" (the average direction of the learning steps) with the "second moment" (how large those steps tend to be) to give each individual setting its own effective learning speed. That per-setting adaptation is what lets even very large and complex models learn efficiently, which is a big deal in the world of artificial intelligence.
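
To see the per-setting adaptation directly, here is a minimal plain-Python sketch that applies one Adam step to two settings at once, each with its own running averages. The function name `adam_update`, the dictionary layout of `state`, and the gradient values are hypothetical; the hyperparameters are the usual defaults.

```python
import math


def adam_update(params, grads, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam step to a list of settings; each keeps its own m and v."""
    state["t"] += 1
    t = state["t"]
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params


params = [0.0, 0.0]
state = {"t": 0, "m": [0.0, 0.0], "v": [0.0, 0.0]}
# One setting sees a huge gradient, the other a tiny one.
params = adam_update(params, [1000.0, 0.001], state)
steps = [abs(p) for p in params]
print(steps)
```

Even though the two gradients differ by a factor of a million, both settings move by almost exactly `lr` (0.001) on this first step, because each step is normalized by that setting's own gradient history. Plain gradient descent with the same `lr` would move the first setting by 1.0 and the second by 0.000001.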

What Sets "AdamW" Apart from "Adam"?

One of the most talked-about relatives of "Adam" is "AdamW." This is the version typically used by default when training the large language models mentioned earlier. Many resources don't make the difference between Adam and AdamW clear, but it comes down to how they handle "weight decay." Weight decay keeps a model's weights from growing too large, which helps prevent the model from becoming too specialized to its training data and helps it generalize. When weight decay is added to Adam as an ordinary L2 penalty, the decay term is folded into the gradient and gets rescaled by Adam's adaptive step sizes along with everything else, so weights that have had large gradients end up regularized less than they would be otherwise. AdamW "decouples" weight decay: it shrinks the weights directly, separately from Adam's adaptive gradient step. It's a subtle change, but it has proven effective in practice, especially for very large models, which makes AdamW the preferred choice for many advanced training tasks. So while the two share a family name, AdamW refines the process to get better results, particularly for those massive models.
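
The difference is easiest to see side by side. Below is a minimal plain-Python sketch, not any library's implementation: `adam_l2_step` adds an L2 penalty to the gradient before Adam's scaling, while `adamw_step` applies decoupled weight decay, the idea behind AdamW. The function names, `lr=0.001`, and `wd=0.01` are assumptions chosen for illustration.

```python
import math


def adam_l2_step(p, grad, m, v, t, lr=0.001, wd=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam + L2 penalty: the decay term is added to the gradient, so it is
    also divided by the adaptive sqrt(v_hat) scaling."""
    g = grad + wd * p
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return p - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


def adamw_step(p, grad, m, v, t, lr=0.001, wd=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Decoupled weight decay: the moments see only the loss gradient, and the
    weight is shrunk directly, outside the adaptive scaling."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    return p - lr * (m_hat / (math.sqrt(v_hat) + eps) + wd * p), m, v


# A weight the loss does not depend on (loss gradient 0), starting at 1.0.
p_l2, _, _ = adam_l2_step(1.0, 0.0, 0.0, 0.0, t=1)
p_w, _, _ = adamw_step(1.0, 0.0, 0.0, 0.0, t=1)
print(p_l2, p_w)
```

In the L2 version the penalty gradient is normalized like any other gradient, so on this first step the weight shrinks by roughly `lr` (to about 0.999) almost regardless of `wd`; in the decoupled version it shrinks by exactly `lr * wd * p` (to 0.99999). That separation is what keeps the strength of weight decay independent of each weight's gradient history.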

- Tara Reid 2024

- How Many Times Has Emily Compagno Been Married

- Venus Williams Parents

- Kanchana Chandrakanthan

- September 16 Zodiac

When was Adam born?

Adam Sandler net worth - salary, house, car

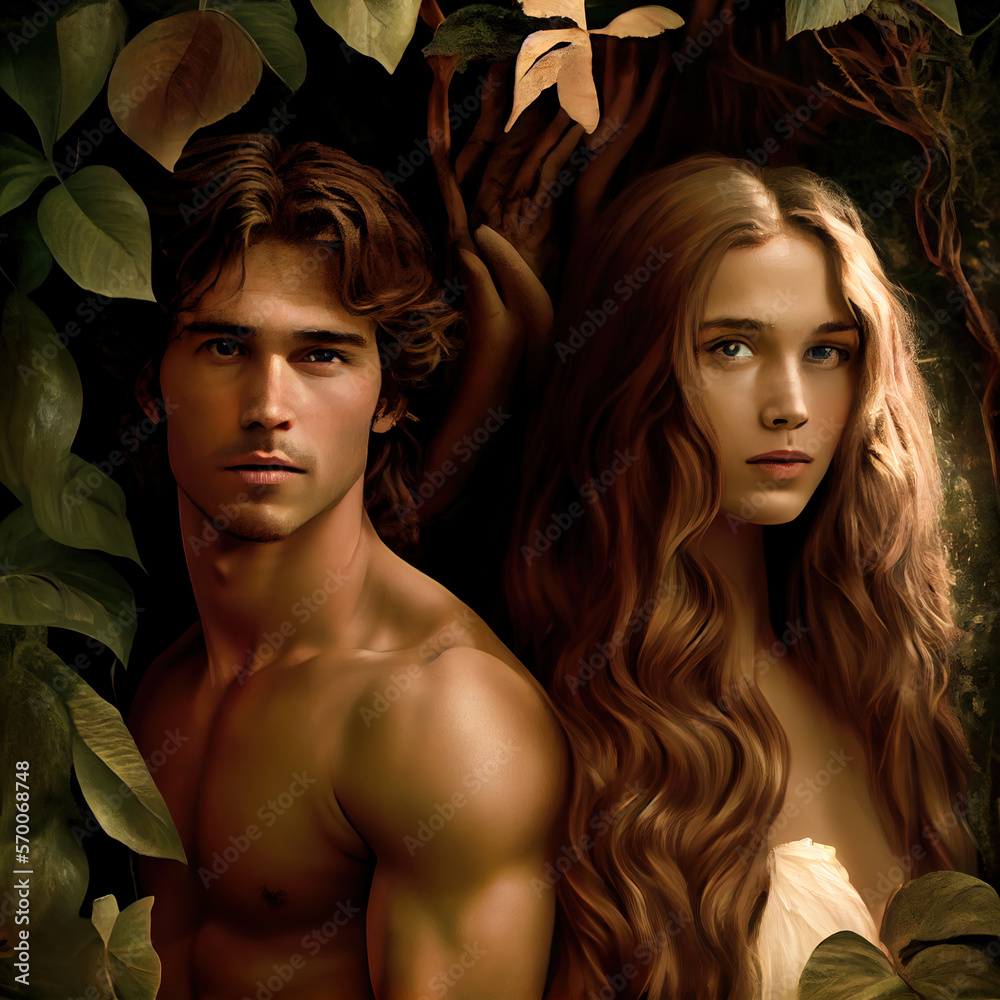

man, woman and the forbidden apple, Adam & Eve concept, artists